Post by nic on Oct 24, 2019 15:19:08 GMT

Imagine you commute to work on a bus. This bus runs every 5 minutes, so when you arrive at the stop you could wait anywhere up to 5 minutes for the next one.

Now, let's say that when you get to the stop, there is a taxi waiting just for you, ready to go immediately. Taking the taxi gets you to work on time, whereas the bus would get you there up to 5 minutes later, possibly after business opening time.

Taking the taxi is how MidiFire's zero latency hosting works. Here are the technical details.

In a normal hosting app, MIDI is processed in chunks according to a 'render cycle'. The length of each cycle depends on the sample rate and the chunk size. A host running at a 48 kHz sample rate with a (typical) 256-frame buffer has a render cycle of 256 / 48000 s ≈ 5.33 ms, so an incoming MIDI event can be delayed by up to 5.33 ms. If the chunk size (audio buffer size) is larger, the delay is longer. Remember too that the delay is not consistent: it depends on where in the cycle the event happens to arrive.
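The worst-case figure is just buffer size divided by sample rate. A quick illustrative sketch in Python (the function name is made up for this example) for a few common buffer sizes:

```python
def max_render_delay_ms(buffer_frames: int, sample_rate_hz: float) -> float:
    """Worst-case wait (ms) for a MIDI event that just missed a render cycle.

    One render cycle lasts buffer_frames / sample_rate_hz seconds, so an event
    can wait anywhere from ~0 up to (just under) this long.
    """
    return buffer_frames / sample_rate_hz * 1000.0

for frames in (128, 256, 512, 1024):
    print(f"{frames:5d} frames @ 48 kHz -> up to {max_render_delay_ms(frames, 48_000):.2f} ms")
```

At 48 kHz this works out to roughly 2.67, 5.33, 10.67 and 21.33 ms for 128, 256, 512 and 1024 frames.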

MidiFire's zero latency hosting pushes every event to the plugin the moment it is received, just like a built-in module, so that variable latency is eliminated.
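To make the bus-versus-taxi difference concrete, here is a deliberately simplified model, not MidiFire's actual code. It assumes a render-cycle host holds each event until the next cycle boundary, while zero latency hosting forwards it on arrival. The function names are hypothetical.

```python
import math

def render_delivery_ms(arrival_ms: float, cycle_ms: float) -> float:
    """Time at which a render-cycle host hands the event to the plugin.

    Simplified model (an assumption): the event waits for the next cycle boundary.
    """
    return math.ceil(arrival_ms / cycle_ms) * cycle_ms

def zero_latency_delivery_ms(arrival_ms: float) -> float:
    """Zero latency hosting: the event is forwarded as soon as it arrives."""
    return arrival_ms

CYCLE_MS = 256 / 48_000 * 1000  # ~5.33 ms per render cycle (256 frames @ 48 kHz)

for arrival in (0.5, 2.0, 5.0, 6.0):
    render_delay = render_delivery_ms(arrival, CYCLE_MS) - arrival
    zero_delay = zero_latency_delivery_ms(arrival) - arrival
    print(f"arrive {arrival:4.1f} ms: zero latency +{zero_delay:.2f} ms, render +{render_delay:.2f} ms")
```

The zero latency delay is always zero, while the render delay changes with the arrival time, which is exactly the inconsistency that makes it noticeable.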

How significant is this saving? Take a hi-hat track that you are channel-mapping inside an AU plugin. With variable latency, it will sound noticeably wonky. No latency = solid. Many players notice latency, so reducing it makes live playing more responsive and fluid.
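The same simplified model (events snapped to the next ~5.33 ms cycle boundary, an assumption rather than how any particular host schedules) shows why a steady hi-hat goes wonky. The tempo, start offset and names are made up for illustration:

```python
import math

CYCLE_MS = 256 / 48_000 * 1000   # assumed 256-frame buffer at 48 kHz (~5.33 ms)
STEP_MS = 125.0                  # 16th notes at 120 BPM (assumed tempo)

def render_delivery_ms(arrival_ms: float) -> float:
    # Simplified model: the event is held until the next render-cycle boundary.
    return math.ceil(arrival_ms / CYCLE_MS) * CYCLE_MS

def intervals(times):
    # Gaps between consecutive timestamps.
    return [b - a for a, b in zip(times, times[1:])]

# A perfectly steady player: one hi-hat hit every 125 ms, starting 1 ms in.
played = [1.0 + i * STEP_MS for i in range(8)]

zero_latency = intervals(played)                             # passed through untouched
render = intervals([render_delivery_ms(t) for t in played])  # snapped to cycle boundaries

print("zero latency gaps:", [round(g, 2) for g in zero_latency])
print("render gaps:      ", [round(g, 2) for g in render])
```

In this model every zero latency gap stays at 125 ms, while the render-cycle gaps jump between roughly 122.67 and 128 ms even though the playing was perfectly even.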

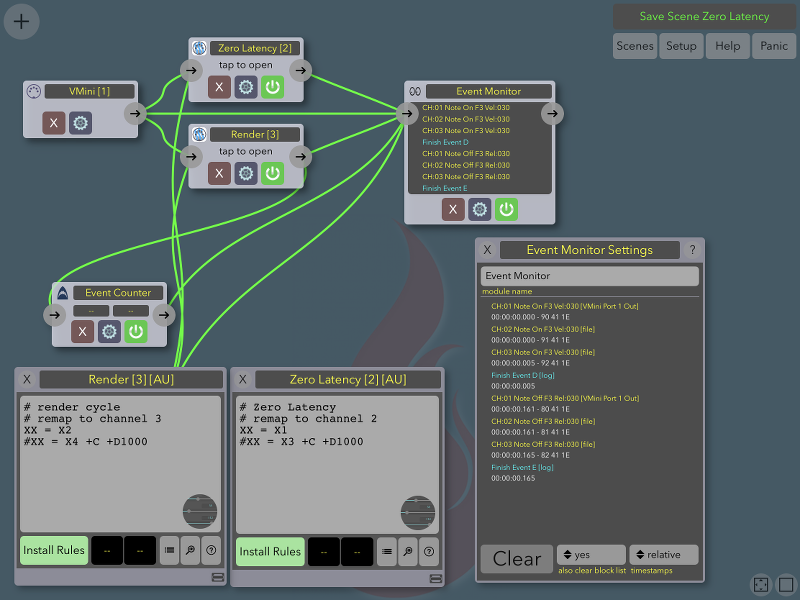

To demonstrate further, take the following MidiFire scene:

Explanation:

- The input device (vMini [1]) is a MIDI keyboard controller, sending events on channel 1.

- The vMini is routed directly to an Event Monitor and two StreamByter AUs in parallel.

- The top StreamByter (Zero Latency [2]) is configured with zero latency and remaps the channel to 2.

- The bottom StreamByter (Render [3]) is configured without zero latency and remaps the channel to 3.

- Both StreamByters are connected to the Event Monitor, and you can see the code for each in the two panels at the bottom left.

- The Event Monitor is configured to show timestamps in 'relative' mode, so the first event received is at time 00:00:00.

- A third (built-in) StreamByter (Event Counter) sends a LOG message to the Event Monitor to mark the end of the event processing.
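The channel remap both StreamByters perform comes down to rewriting the low four bits of the MIDI status byte (channel 1 is stored as 0, channel 16 as 15). The sketch below is plain Python, not StreamByter code (the real StreamByter rules are in the screenshot), and `remap_channel` is a hypothetical helper:

```python
def remap_channel(message: bytes, new_channel: int) -> bytes:
    """Return a copy of a MIDI channel voice message moved to new_channel (1-16).

    System messages (status 0xF0-0xFF) have no channel and are returned unchanged.
    """
    if not 1 <= new_channel <= 16:
        raise ValueError("MIDI channels are numbered 1-16")
    status = message[0]
    if status < 0x80 or status >= 0xF0:
        return bytes(message)  # data byte or system message: leave alone
    # Keep the message type (high nibble), replace the channel (low nibble).
    new_status = (status & 0xF0) | (new_channel - 1)
    return bytes([new_status]) + bytes(message[1:])

note_on_ch1 = bytes([0x90, 60, 100])  # note on, middle C, velocity 100, channel 1
print(remap_channel(note_on_ch1, 2).hex())  # 913c64 -> channel 2 (the "Zero Latency" path)
print(remap_channel(note_on_ch1, 3).hex())  # 923c64 -> channel 3 (the "Render" path)
```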

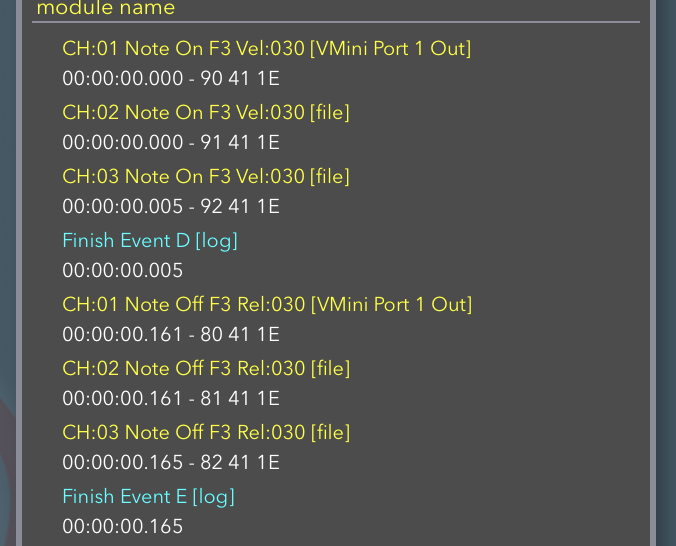

Now, examine the event monitor in detail:

Original events will be on channel 1, zero latency events on channel 2 and render events on channel 3.

I played a single note, which generates a note on and a note off event. The two are separated by the blue LOG message to make them easier to tell apart.

You will notice that the timestamps of the events on channels 1 and 2 are identical, so the zero latency path adds no delay at all.

The timestamps of the events on channel 3 are 4 or 5 ms later than the other two. These events took the commuter bus and arrived (variably) later than the incoming event.

Even when several plugins are connected in series, the event passes immediately from one plugin to the next.
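A minimal sketch of what passing immediately through a series chain means, assuming each plugin can be modelled as a function from one MIDI message to another. The plugin stand-ins and names here are hypothetical, not MidiFire or AU APIs:

```python
from typing import Callable, List

Message = bytes
Plugin = Callable[[Message], Message]

def run_chain(plugins: List[Plugin], message: Message) -> Message:
    """Push one event through a chain of plugins immediately.

    Each plugin's output is handed straight to the next one in the same call,
    so no stage waits for a render-cycle boundary.
    """
    for plugin in plugins:
        message = plugin(message)
    return message

def to_channel(channel: int) -> Plugin:
    # Hypothetical stand-in for a plugin that rewrites the channel nibble.
    def plugin(msg: Message) -> Message:
        return bytes([(msg[0] & 0xF0) | (channel - 1)]) + msg[1:]
    return plugin

def transpose(semitones: int) -> Plugin:
    # Hypothetical stand-in for a plugin that shifts data byte 1 (the note number), clamped to 0-127.
    def plugin(msg: Message) -> Message:
        return msg[:1] + bytes([max(0, min(127, msg[1] + semitones))]) + msg[2:]
    return plugin

chain = [to_channel(2), transpose(12), to_channel(3)]
print(run_chain(chain, bytes([0x90, 60, 100])).hex())  # 924864
```

The note on for middle C on channel 1 comes out as `92 48 64`: channel 3, one octave up, same velocity, all within a single call.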

Now, let's say that when you get to the stop, there is a taxi waiting just for you, ready to go immediately. Taking the taxi will get you to work on time where if you took the bus, you would arrive up to 5 minutes later and possibly after business opening time.

Taking the taxi is how MidiFire's zero latency hosting works. Here is that in technical detail.

In a normal hosting app, chunks of MIDI are processed according to a 'render cycle'. This cycle depends upon the sample rate and the size of each chunk. A host running at 48Khz sample rate and processing (typical) 256 byte sized chunks means an incoming MIDI event will get delayed by up to 5.33ms. If the chunk size (audio buffer size) is larger, this delay is longer (and remember the delay is not consistent).

MidiFire's zero latency hosting pushes every event received to the plugin immediately, just like a built-in module. Thus, we shave off that variable latency.

How significant is this saving? Take a hi hat track that you are channel mapping inside an AU plugin. With variable latency it will be noticeably wonky. No latency = solid. Many players will notice latency so reducing it makes live playing more responsive and fluid.

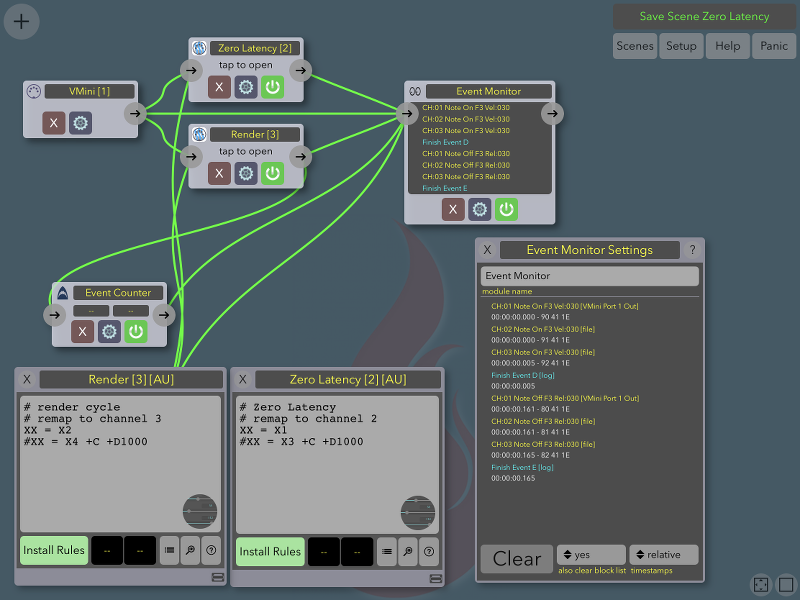

To demonstrate further, take the following MidiFire scene:

Explanation:

- The input device (vMini [1]) is a MIDI keyboard controller, sending events on channel 1.

- The vMini is routed directly to an Event Monitor and two StreamByter AUs in parallel.

- The top StreamByter (Zero Latency [2]) is configured with zero latency and remaps the channel to 2

- The bottom StreamByter (Render [3]) is configured without zero latency and remaps the channel to 3

- Both Stream Byters are connected to the Event Monitor and you can see the code for each in the two panels bottom left.

- The Event Monitor is configured to show timestamps in 'relative' mode, so the first event received is at time 00:00:00

- A third (built-in) StreamByter (Event Counter) sends a LOG message to the Event Monitor to mark the end of the event processing.

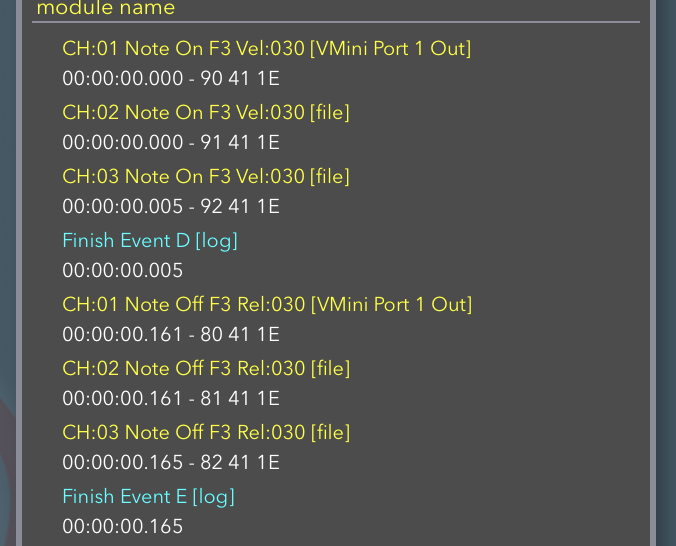

Now, examine the event monitor in detail:

Original events will be on channel 1, zero latency events on channel 2 and render events on channel 3.

I played a single note which generates a note on and note off event. The notes are separated by the blue log message to make it easier to distinguish between them.

You will notice that the timestamps on the events on channel 1 and 2 are identical, so there is no difference between them.

The timestamps on the events on channel 3, are 4 or 5 ms later than the other two. These events went though the commuter bus and arrived (variably) later than the incoming event.

Even if a number of plugins are connected in series, the event will pass immediately between each plugin.